AI (artificial intelligence) is changing how industries work around the world: speeding up diagnosis in healthcare, supporting finance with predictive analytics, improving e-commerce with product recommendations, and making entertainment more engaging with personalised suggestions. All of this is driven by AI models, which are trained to detect patterns, analyse large volumes of data, and surface insights from that data.

However, many organisations overlook the true cost of that training process. AI model training costs vary with the type of model and learning approach, the size of the datasets, the underlying infrastructure, and the energy consumed. Costs can be in the hundreds of dollars for a basic machine learning algorithm and can escalate to millions of dollars for a large language model (LLM) with billions of parameters.

For startup founders, researchers and corporate executives alike, it’s important to factor this cost into strategic planning. Failing to do so can lead to underestimated expenditure, budget deficits, delayed product launches and a slower innovation process. With some careful thought about their actual performance requirements, and about whether they can leverage pre-trained models, cloud solutions and data-efficient techniques, startups and research teams can contain their expenditure and still deliver good performance.

In this blog, we will highlight the key budget considerations for AI model training, compare cloud-based and on-premises solutions, share some real-world examples, and offer practical approaches to control spending and optimise ROI.

Why AI Model Training Costs Matter

The cost of AI model training varies with the complexity and scope of the project. Basic models such as linear regression or simple classifiers may cost only a few hundred dollars to train, because they typically use small amounts of data and simple computation. By contrast, complex deep learning models such as GPT, BERT or large image recognition models can cost millions of dollars. These large-scale models use huge training datasets, run on clusters of powerful GPUs or TPUs, often take several weeks to train, and require specialised tooling and engineering effort.

Whether you are a startup, a researcher or an enterprise, it is worth understanding what drives the cost of training AI models. Misestimated training costs can lead to budget overruns, project delays, postponed product launches and lost market share in a competitive environment.

To avoid all this, organisations must carefully examine their requirements to choose the right approach:

- Training a model from scratch: Provides complete control but requires extensive resources, time and expertise.

- Fine-tuning pre-trained models: A cost-effective way to reuse existing architectures and adapt them to specific business needs.

- Relying on a cloud-based API: Ideal for small teams who want powerful AI capabilities without heavy training costs.

By understanding the trade-offs between these alternatives, companies can balance innovation with financial efficiency.

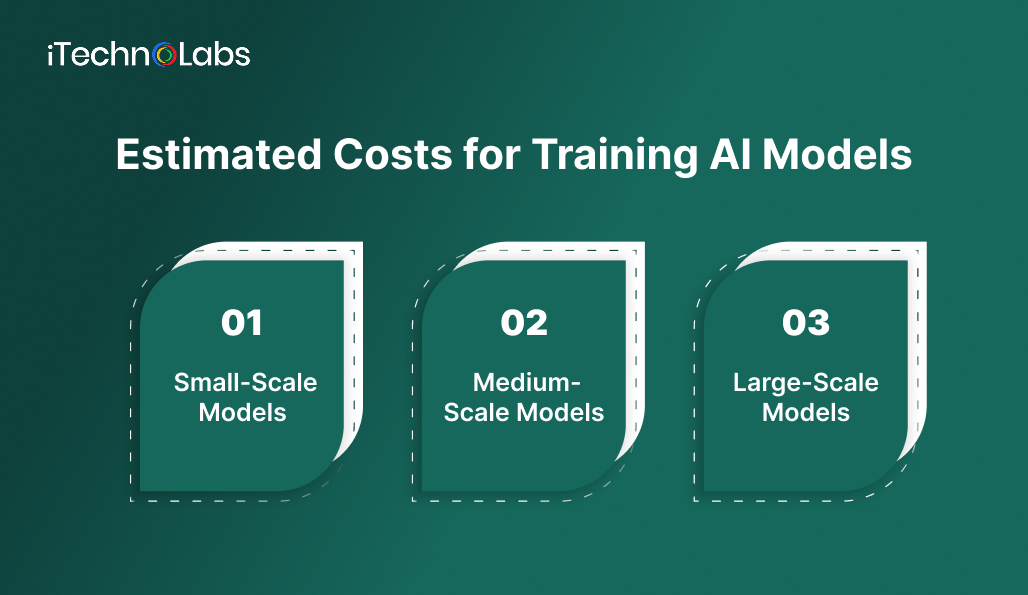

Estimated Costs for Training AI Models

The AI model training cost depends heavily on the size and complexity of the model. Let’s break it down by scale:

1. Small-Scale Models

- Example: Fraud detection with logistic regression or simple decision trees.

- Cost Estimate: $100–$1,000.

- Training Time: Minutes to a few hours.

- Resources Required: Basic CPUs or entry-level GPUs (sometimes even a personal laptop can handle it).

Lightweight models are popular for narrowly defined problems where datasets are small and feature engineering is simple. They are often the starting point for startups and academics, since they allow affordable prototyping of ideas.

2. Medium-Scale Models

- Example: Image classification with Convolutional Neural Networks (CNNs) or natural language processing (NLP) tasks like sentiment analysis.

- Cost Estimate: $10,000 – $100,000.

- Training Time: Hours to several days.

- Resources Required: Multiple GPUs or mid-sized cloud training clusters.

These models are more complex and usually require larger datasets (hundreds of thousands to millions of samples) and more computation. Training typically runs on GPU/TPU instances from a cloud platform such as AWS, Google Cloud or Azure. Costs pile up quickly when you run repeated experiments, especially while tuning hyperparameters.

3. Large-Scale Models

- Example: Large Language Models (LLMs) like GPT, advanced computer vision networks, or generative AI systems.

- Cost Estimate: $1 million – $10+ million.

- Training Time: Weeks to months.

- Resources Required: Hundreds or thousands of GPUs/TPUs running in parallel across massive clusters.

These are highly sophisticated models, often with billions of parameters, trained on enormous datasets (for LLMs, up to trillions of tokens). As a reference, training GPT-3 is estimated to have cost OpenAI around $4.6 million, and it required specialised infrastructure and significant engineering support. Projects of this kind are usually only feasible for well-funded companies or research organisations.

Cloud vs. On-Premises Training Costs

When considering AI model training cost, one of the biggest decisions organizations face is where to train their models: in the cloud or on on-premises infrastructure.

Cloud Training

- Pros:

- No upfront investment in hardware.

- On-demand scalability: organizations can instantly spin up dozens of GPUs.

- Access to the latest GPUs/TPUs without worrying about upgrades.

- Cons:

- Costs grow rapidly with long-term or repeated training.

- Dependency on service providers and pricing fluctuations.

Example:

An AWS p4d instance (8 NVIDIA A100 GPUs) costs about $32.77/hour on demand. Running a moderately complex model 24/7 for two weeks can cost between $10,000 and $50,000, depending on how many instances you need. For large projects, costs can reach hundreds of thousands of dollars.
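The arithmetic behind this estimate is simply hourly rate × hours × number of instances. The Python sketch below makes it explicit; the $32.77/hour figure is the one quoted above and should be checked against current AWS pricing, and the instance counts are illustrative assumptions.

```python
def cloud_training_cost(hourly_rate: float, hours: float, instances: int = 1) -> float:
    """On-demand bill for running `instances` identical instances for `hours` each."""
    if hourly_rate < 0 or hours < 0 or instances < 1:
        raise ValueError("rate and hours must be non-negative; instances must be >= 1")
    return hourly_rate * hours * instances


P4D_HOURLY_RATE = 32.77  # USD per hour, the figure quoted above; check current AWS pricing
TWO_WEEKS = 24 * 14      # 336 hours of round-the-clock training

for n in (1, 2, 4):  # illustrative instance counts
    cost = cloud_training_cost(P4D_HOURLY_RATE, TWO_WEEKS, instances=n)
    print(f"{n} instance(s) for two weeks: ${cost:,.0f}")
```

With these inputs, one instance for two weeks comes to about $11,000 and four instances to about $44,000, which sits within the range quoted above. Spot or reserved pricing changes the rate, not the formula.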

On-Premises Training

- Pros:

- More cost-effective for organisations with frequent large-scale training.

- Complete control over hardware, data privacy and compliance.

- Cons:

- High upfront investment: servers with many GPUs can start from $50,000+.

- Internal expertise is necessary for setup, cooling, electricity and continuous maintenance.

For companies that train AI at this scale, on-premises infrastructure often becomes more economical with repeated use. However, it comes with significant operational responsibilities.
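One way to judge when on-premises hardware pays off is a simple break-even calculation: divide the upfront hardware cost by the monthly amount saved compared with the cloud. The sketch below assumes flat monthly costs and ignores depreciation, staffing, financing and hardware refresh cycles; all figures in it are hypothetical.

```python
import math


def break_even_months(hardware_cost: float,
                      monthly_onprem_opex: float,
                      monthly_cloud_spend: float) -> float:
    """Months until avoided cloud spend covers the upfront hardware cost.

    Returns math.inf when the cloud bill is not higher than on-prem running
    costs, because the hardware investment is then never recovered.
    """
    monthly_saving = monthly_cloud_spend - monthly_onprem_opex
    if monthly_saving <= 0:
        return math.inf
    return hardware_cost / monthly_saving


# Hypothetical figures for illustration only.
months = break_even_months(hardware_cost=250_000,
                           monthly_onprem_opex=5_000,   # power, cooling, maintenance
                           monthly_cloud_spend=25_000)  # equivalent steady cloud bill
print(f"Break-even after about {months:.1f} months")
```

With these placeholder numbers the hardware pays for itself after 12.5 months; if the monthly cloud bill is lower than on-premises running costs, it never does.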

Hidden Costs to Consider When Training an AI Model

When budgeting for AI model training cost, it’s not just about GPUs and cloud bills. There are several “invisible” expenses that often catch teams off guard:

Data Preparation

- Collecting, cleaning, and labeling datasets can consume more time and money than the training itself.

- Manual annotation (e.g., labeling medical images) can cost tens of thousands of dollars.

Experimentation

- AI development is iterative: multiple training runs are needed to optimize accuracy.

- Each experiment compounds compute costs, especially for larger models.

Monitoring & Maintenance

- Models degrade over time (a concept known as model drift).

- Continuous retraining and monitoring tools add recurring costs.

Compliance & Security

- Handling sensitive data (like healthcare or financial data) requires compliance with regulations such as GDPR or HIPAA.

- Security tools, audits, and encryption measures all contribute to hidden costs.

The AI model training cost is not just about hardware; it’s an ecosystem of expenses involving data, infrastructure, experimentation, compliance, and maintenance. A small project might be affordable with cloud GPUs, while enterprise-level AI can easily require millions in investment.


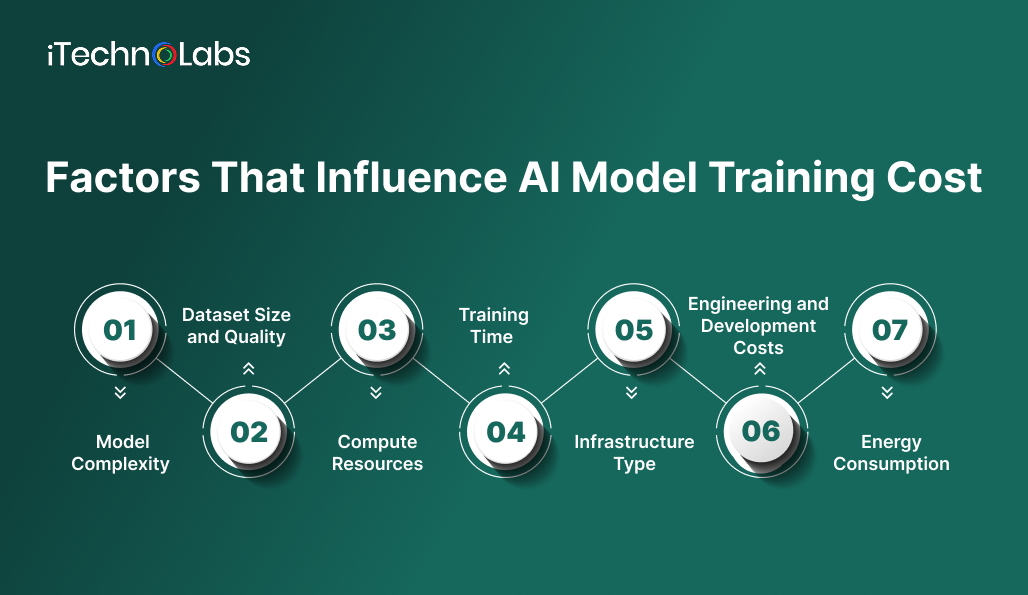

Factors That Influence AI Model Training Cost

Training an AI model is not just about feeding data into an algorithm. Several key factors determine the overall cost:

1. Model Complexity

- Small models: Basic machine learning models (for example, decision trees, linear regression or logistic regression) can be trained on modest hardware at minimal expense.

- Deep learning models: Complex neural networks, especially those with millions or billions of parameters (such as LLMs or computer vision systems), require significantly more resources.

2. Dataset Size and Quality

- More data usually leads to better results but also increases training costs.

- Costs include data collection, cleaning, labelling, and storage.

- High-quality, domain-specific datasets often need manual labelling, which adds substantial expense.

3. Compute Resources

- Training requires high-performance GPUs or TPUs.

- Cloud providers like AWS, Google Cloud, and Azure charge per hour for GPU/TPU usage.

- Specialised hardware accelerators can reduce training time but increase upfront cost.

4. Training Time

- Training can last hours, days, or even weeks depending on the model.

- Longer training means more compute usage, which directly increases cost.
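For transformer models, a widely used rule of thumb ties model size, dataset size and training time together: total training compute is roughly 6 × N × D floating-point operations, where N is the parameter count and D the number of training tokens. The sketch below turns that into GPU-hours. The 312 TFLOP/s peak (the NVIDIA A100's dense BF16 figure) and the 40% utilisation are assumptions; real utilisation depends heavily on the model, software stack and cluster.

```python
def training_gpu_hours(n_params: float, n_tokens: float,
                       peak_flops_per_gpu: float, utilization: float) -> float:
    """Rough GPU-hours to train a dense transformer.

    Uses the rule of thumb: total training compute ≈ 6 * N * D FLOPs,
    where N is the parameter count and D the number of training tokens.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    total_flops = 6 * n_params * n_tokens
    achieved_flops_per_second = peak_flops_per_gpu * utilization
    return total_flops / achieved_flops_per_second / 3600  # seconds -> hours


# Assumed run: 1B parameters, 20B tokens, A100 dense BF16 peak (312 TFLOP/s), 40% utilization.
gpu_hours = training_gpu_hours(1e9, 20e9, 312e12, 0.40)
print(f"about {gpu_hours:,.0f} GPU-hours")
```

These inputs give roughly 267 GPU-hours. Doubling either the parameter count or the number of tokens doubles the estimate, and multiplying by a per-GPU hourly rate turns it into a budget figure.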

5. Infrastructure Type

- Cloud-based Training: Pay-as-you-go model but can get expensive for large projects.

- On-premises Hardware: High upfront investment but lower long-term cost for repeated training.

6. Engineering and Development Costs

- Salaries for data scientists, machine learning engineers, and DevOps specialists.

- Time spent optimizing algorithms, tuning hyperparameters, and testing.

7. Energy Consumption

- Training large models consumes enormous amounts of electricity.

- This translates into both monetary cost and environmental concerns.
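Electricity cost can be estimated from GPU count, power draw, run time and the data centre's power usage effectiveness (PUE), which scales IT power up to account for cooling and other overhead. In the sketch below, the 400 W per GPU, the PUE of 1.5 and the $0.12 per kWh are hypothetical values, and only GPU power is counted.

```python
def training_energy_cost(num_gpus: int, watts_per_gpu: float, hours: float,
                         pue: float, price_per_kwh: float) -> tuple[float, float]:
    """Return (energy in kWh, electricity cost) for a training run.

    Counts GPU power only; CPUs, memory and networking would add more.
    PUE >= 1.0 scales that power up for cooling and facility overhead.
    """
    kwh = num_gpus * watts_per_gpu * hours * pue / 1000  # watt-hours -> kWh
    return kwh, kwh * price_per_kwh


# Hypothetical run: 64 GPUs at 400 W for two weeks, PUE 1.5, $0.12 per kWh.
kwh, cost = training_energy_cost(64, 400, 24 * 14, 1.5, 0.12)
print(f"{kwh:,.0f} kWh, about ${cost:,.0f} in electricity")
```

For this hypothetical two-week run, that is about 12,900 kWh and roughly $1,550 in electricity.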

How to Reduce AI Model Training Costs

Managing AI model training costs is important for startups and established companies alike, especially those that must balance innovation with limited budgets. Fortunately, there are proven strategies that can cut expenses without sacrificing results.

1. Leverage Pre-Trained Models

Training a model from scratch is often the most expensive option because it requires large datasets and compute resources. Instead, organisations can take pre-trained models such as BERT, ResNet or GPT variants and fine-tune them for their specific use case.

- Cost Reduction: Up to 90% savings compared to full-scale training.

- Example: A chatbot developer does not need to train a large language model from scratch; it can fine-tune an existing LLM on an industry-specific dataset for a fraction of the cost.
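The cheapest form of reuse is to freeze the pre-trained network entirely and train only a new output layer (often called linear probing). The sketch below counts how small that trainable part is. The backbone size (about 23.5 million parameters, roughly a ResNet-50 without its original classifier), the 2048-dimensional features and the 5-class task are assumptions for illustration; in practice teams often unfreeze some top layers as well, which raises the count.

```python
def trainable_fraction(backbone_params: int, feature_dim: int, num_classes: int) -> float:
    """Fraction of all weights that are updated when only a new linear head is trained.

    The pre-trained backbone stays frozen; the head is a dense layer with
    feature_dim * num_classes weights plus one bias per class.
    """
    head_params = feature_dim * num_classes + num_classes
    return head_params / (backbone_params + head_params)


# Assumed: a ResNet-50-sized backbone (~23.5M parameters without its original
# classifier) producing 2048-dim features, reused for a 5-class task.
fraction = trainable_fraction(23_500_000, 2048, 5)
print(f"trainable: {fraction:.3%} of all parameters")
```

Here well under 0.1% of the weights are updated. Because the frozen backbone needs no weight gradients or optimiser state, each training step is cheaper, and a small labelled dataset is often enough.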

2. Efficient Data Management

Data plays a huge role in determining AI model training cost. While large datasets improve accuracy, they also inflate costs.

- Use smaller but higher-quality datasets instead of massive, noisy ones.

- Employ synthetic data generation when real data is scarce or costly. For example, in healthcare imaging, synthetic X-rays can supplement training sets.

- Data augmentation techniques (flipping, cropping, rotating images) can expand datasets at almost no cost.
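The three augmentations mentioned above are simple array operations. The dependency-free Python sketch below applies them to a grayscale image stored as a list of rows; real pipelines would use a library such as torchvision or Albumentations, and the function names here are illustrative.

```python
import random

Image = list[list[int]]  # grayscale image stored as rows of pixel values


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in img]


def rotate_90_clockwise(img: Image) -> Image:
    """Rotate 90 degrees clockwise: reverse the row order, then transpose."""
    return [list(col) for col in zip(*img[::-1])]


def random_crop(img: Image, height: int, width: int, rng: random.Random) -> Image:
    """Cut a height x width window at a random position inside the image."""
    top = rng.randint(0, len(img) - height)
    left = rng.randint(0, len(img[0]) - width)
    return [row[left:left + width] for row in img[top:top + height]]


def augment(img: Image, crop_size: int, rng: random.Random) -> list[Image]:
    """Return the original plus three cheap variants that share its label."""
    return [img, flip_horizontal(img), rotate_90_clockwise(img),
            random_crop(img, crop_size, crop_size, rng)]


rng = random.Random(0)
image = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
for variant in augment(image, crop_size=2, rng=rng):
    print(variant)
```

Each variant keeps the original label, which only holds when the transformation does not change what the image means; flipping an image that contains text, or rotating digits, can change what the label should be.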

3. Optimize Hyperparameters

Hyperparameter tuning (adjusting learning rates, batch sizes, layers, etc.) can take dozens of trial runs, each adding to training cost.

- Automated solutions like AutoML, Optuna, or Hyperopt can streamline this process.

- Bayesian optimization and reinforcement learning methods can reduce the number of failed experiments.

- The result is faster convergence with fewer wasted compute hours.
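Besides Bayesian optimisation, a complementary way to waste fewer compute hours is to stop unpromising configurations early. The sketch below implements successive halving, the idea behind Hyperband (Optuna offers both as pruners): train many candidates briefly, keep the better half, and give the survivors twice the budget. `train_and_score` is a hypothetical stand-in for a real training run, and its scoring formula is made up.

```python
import math
import random


def train_and_score(learning_rate: float, epochs: int) -> float:
    """Hypothetical stand-in for a real training run; higher validation score is better.

    The made-up formula peaks at learning_rate = 1e-3 and improves with more epochs.
    """
    lr_penalty = 0.1 * abs(math.log10(learning_rate) + 3)
    return 1.0 - lr_penalty - 1.0 / (epochs + 1)


def successive_halving(configs: list[float], min_epochs: int = 1) -> tuple[float, int, int]:
    """Return (best config, total epochs spent, epochs used in the final round).

    Each round trains every surviving config, keeps the better half and
    doubles the per-config epoch budget for the next round.
    """
    if not configs:
        raise ValueError("need at least one configuration")
    survivors = list(configs)
    epochs, spent, last_round_epochs = min_epochs, 0, 0
    while len(survivors) > 1:
        ranked = sorted(survivors, key=lambda lr: train_and_score(lr, epochs), reverse=True)
        spent += epochs * len(survivors)
        survivors = ranked[: len(survivors) // 2]
        last_round_epochs = epochs
        epochs *= 2
    return survivors[0], spent, last_round_epochs


rng = random.Random(42)
candidates = [10 ** rng.uniform(-5, -1) for _ in range(16)]  # log-uniform learning rates
best_lr, spent, last = successive_halving(candidates)
print(f"best lr {best_lr:.1e}: {spent} epochs, versus {len(candidates) * last} "
      f"to train every candidate for {last} epochs")
```

The trade-off is that early scores from real training runs can be noisy, so a configuration that starts slowly may be discarded too soon.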

4. Use Spot Instances in Cloud

Cloud providers like AWS, Azure, and GCP offer spot instances (unused compute capacity sold at a discount).

- These can be 50–70% cheaper than on-demand rates.

- Example: Instead of paying $32/hour for an AWS GPU instance, a spot instance might only cost $10–15/hour.

- Risk: Instances may be interrupted if demand spikes, but for non-critical training runs, the savings are substantial.
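The usual way to tolerate spot interruptions is to checkpoint regularly and resume from the last checkpoint when a new instance starts (AWS, for example, gives a two-minute warning before reclaiming a Spot Instance). The sketch below shows the pattern with a placeholder training loop and a JSON file; a real job would save model and optimiser state with its framework's own checkpoint functions, and the `stop_after` argument only simulates an interruption.

```python
import json
import os
import tempfile
from typing import Optional


def save_checkpoint(path: str, state: dict) -> None:
    """Write state via a temp file so an interruption never leaves a half-written checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
    os.replace(tmp_path, path)  # atomic on POSIX file systems


def load_checkpoint(path: str) -> dict:
    """Return the saved state, or a fresh one if no checkpoint exists yet."""
    if not os.path.exists(path):
        return {"step": 0, "loss": None}
    with open(path) as f:
        return json.load(f)


def train(path: str, total_steps: int, checkpoint_every: int = 10,
          stop_after: Optional[int] = None) -> dict:
    """Run (or resume) training; `stop_after` simulates the instance being reclaimed."""
    state = load_checkpoint(path)
    steps_this_session = 0
    while state["step"] < total_steps:
        if stop_after is not None and steps_this_session == stop_after:
            return state  # interrupted: progress since the last checkpoint is lost
        state["step"] += 1
        state["loss"] = 1.0 / state["step"]  # placeholder for a real optimisation step
        steps_this_session += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state


with tempfile.TemporaryDirectory() as tmp:
    ckpt = os.path.join(tmp, "checkpoint.json")
    train(ckpt, total_steps=100, stop_after=35)  # first spot instance reclaimed
    print("resuming from step", load_checkpoint(ckpt)["step"])  # 30
    print("finished at step", train(ckpt, total_steps=100)["step"])  # 100
```

Writing to a temporary file and then calling `os.replace` means an interruption mid-save leaves the previous checkpoint intact. Checkpointing more often loses less work per interruption but adds I/O time.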

5. Distributed and Parallel Training

Large models often take weeks to train, but distributed training frameworks like Horovod or PyTorch Distributed can divide tasks across multiple GPUs.

- This shortens training time significantly.

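- Since GPU rentals are billed per hour, making full use of the GPUs you are already paying for (for example, all eight GPUs on a multi-GPU instance rather than one) shortens the run and directly reduces costs.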

- Example: Cutting a training cycle from 2 weeks to 5 days means thousands of dollars saved in GPU fees.
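Whether distributed training saves money depends on what you are billed for. The sketch below assumes a single multi-GPU instance billed per hour regardless of how many of its GPUs the job uses, and models communication overhead with a scaling-efficiency factor; the 70% efficiency is an assumption. If you rent additional instances instead, total GPU-hours, and therefore cost, stay roughly the same or rise, even though the run finishes sooner.

```python
def run_on_one_instance(single_gpu_hours: float, gpus_used: int,
                        scaling_efficiency: float,
                        instance_hourly_rate: float) -> tuple[float, float]:
    """Return (wall-clock hours, bill) for a job on one multi-GPU instance.

    The instance is billed per hour however many of its GPUs the job uses.
    scaling_efficiency (0 < e <= 1) models communication overhead: the job
    runs gpus_used * scaling_efficiency times faster than on a single GPU.
    """
    if gpus_used < 1 or not 0 < scaling_efficiency <= 1:
        raise ValueError("need gpus_used >= 1 and 0 < scaling_efficiency <= 1")
    speedup = 1.0 if gpus_used == 1 else gpus_used * scaling_efficiency
    wall_hours = single_gpu_hours / speedup
    return wall_hours, wall_hours * instance_hourly_rate


RATE = 32.77  # USD per hour for the whole instance, as quoted earlier
baseline_hours, baseline_bill = run_on_one_instance(336, 1, 1.0, RATE)
parallel_hours, parallel_bill = run_on_one_instance(336, 4, 0.7, RATE)  # assumed 70% efficiency
print(f"{baseline_hours:.0f} h -> {parallel_hours:.0f} h, "
      f"saving ${baseline_bill - parallel_bill:,.0f}")
```

With one GPU busy the run takes 336 hours (two weeks); spreading it across four of the instance's GPUs at 70% efficiency brings it to 120 hours (five days), and at $32.77/hour the bill drops by about $7,078.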

6. Regular Model Updates (Incremental Training)

Instead of retraining from scratch every time data changes, organisations can use incremental learning or continuous fine-tuning.

- This approach updates models with new data in smaller batches.

- Saves compute time and energy, making it more cost-efficient.

- Example: An e-commerce recommendation engine doesn’t need full retraining; it only updates based on new customer behaviour.
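Incremental training can be as simple as continuing gradient descent from the current weights on new data only, rather than reinitialising and retraining on everything. The sketch below does this for a tiny linear model in plain Python; the data and learning rate are hypothetical, and production systems would use their framework's equivalent (for example, scikit-learn's `partial_fit` on estimators that support it).

```python
Example = tuple[list[float], float]  # (features, target)


def predict(weights: list[float], bias: float, x: list[float]) -> float:
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def mse(weights: list[float], bias: float, data: list[Example]) -> float:
    return sum((predict(weights, bias, x) - y) ** 2 for x, y in data) / len(data)


def incremental_update(weights: list[float], bias: float, new_batch: list[Example],
                       lr: float = 0.05, epochs: int = 50) -> tuple[list[float], float]:
    """Continue SGD from the existing weights using only the new batch."""
    w, b = list(weights), bias  # copy so the deployed model is left untouched
    for _ in range(epochs):
        for x, y in new_batch:
            error = predict(w, b, x) - y
            w = [wi - lr * error * xi for wi, xi in zip(w, x)]
            b -= lr * error
    return w, b


# Hypothetical: the deployed model learned y = x; new behaviour follows y = 1.2x + 0.5.
old_w, old_b = [1.0], 0.0
new_batch = [([0.0], 0.5), ([1.0], 1.7), ([2.0], 2.9)]
new_w, new_b = incremental_update(old_w, old_b, new_batch)
print(f"error on new data: {mse(old_w, old_b, new_batch):.3f} -> "
      f"{mse(new_w, new_b, new_batch):.3f}")
```

Updating on new data alone risks the model forgetting older patterns, so teams often mix a sample of historical data into each update batch.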

You can reduce AI model training costs with smart strategies such as using pre-trained models, optimising data and hyperparameters, and taking advantage of discounted cloud capacity like spot instances, while still achieving high-performance results.

Future Trends in AI Model Training Costs

The cost of training AI is expected to change due to:

- Advances in Hardware – More efficient GPUs/TPUs will lower costs.

- Better Algorithms – Techniques like parameter-efficient fine-tuning (PEFT) reduce compute requirements.

- AI-as-a-Service – Increasing reliance on subscription-based AI APIs.

- Green AI Movement – Emphasis on energy-efficient training to reduce environmental costs.
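To see why parameter-efficient fine-tuning cuts compute and memory, consider LoRA (low-rank adaptation), one popular PEFT technique: instead of updating a full weight matrix, it freezes it and trains two small matrices whose product forms a low-rank update. The sketch below counts trainable parameters for a single 4096 × 4096 matrix at rank 8; both sizes are illustrative assumptions.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> tuple[int, int]:
    """Return (full fine-tuning params, LoRA params) for one weight matrix.

    Full fine-tuning updates every entry of W (d_out x d_in). LoRA freezes W
    and learns B @ A with B: d_out x rank and A: rank x d_in.
    """
    full = d_out * d_in
    lora = rank * (d_out + d_in)
    return full, lora


full, lora = lora_trainable_params(4096, 4096, rank=8)
print(f"full: {full:,}  LoRA: {lora:,}  ({lora / full:.2%} of full)")
```

For this matrix LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the full count.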


Conclusion

Training an AI model can cost anywhere from a few hundred dollars to millions, depending on the model’s size, the quality of the data, the infrastructure used, and the organisation’s engineering capabilities. Small-scale models may cost only a few hundred dollars, whereas advanced systems such as large language models require massive compute resources and specialised hardware, pushing costs into the millions.

For most organisations, training from scratch is seldom the best option. Taking existing (often freely available) models, fine-tuning them for a specific task, and running the work on cloud infrastructure usually costs far less. This approach balances scale against cost much better and lets you build on existing datasets. Combining it with good data management practices, efficient hyperparameter tuning, and well-chosen cloud pricing options can further reduce overall costs.

The point? Even though the cost of AI model training can vary widely, an organisation with a plan in place can keep costs under control and still benefit from the power of artificial intelligence.

FAQs

1. What is the average AI model training cost?

AI model training cost ranges from a few hundred dollars for small models to millions for advanced large-scale systems, depending on data size, compute resources, and complexity.

2. Why is training AI models so expensive?

Costs arise from high-quality datasets, powerful GPUs/TPUs, long training times, and engineering expertise. Together, these factors significantly increase AI model training cost, especially for large, cutting-edge models.

3. Can cloud services reduce AI model training cost?

Yes, cloud services offer scalable resources without upfront investment. Using spot instances, pre-trained models, and optimized workflows significantly reduces AI model training cost for startups and enterprises alike.

4. How do pre-trained models help lower costs?

Pre-trained models eliminate the need to train from scratch, allowing businesses to fine-tune existing architectures, cutting compute hours, and reducing AI model training cost by up to 90%.

5. What hidden expenses affect AI model training cost?

Beyond hardware and compute, hidden costs include data labeling, experimentation, compliance, and model maintenance. These often exceed initial estimates, making accurate budgeting for AI model training cost essential.